About me

My name is Gang Zhou. I am currently pursuing a Ph.D. at the School of Artificial Intelligence, Beijing University of Posts and Telecommunications, and expect to graduate in 2027.

Research

My research interests include trustworthy multimodal retrieval and vision-language models. * denotes equal contribution.

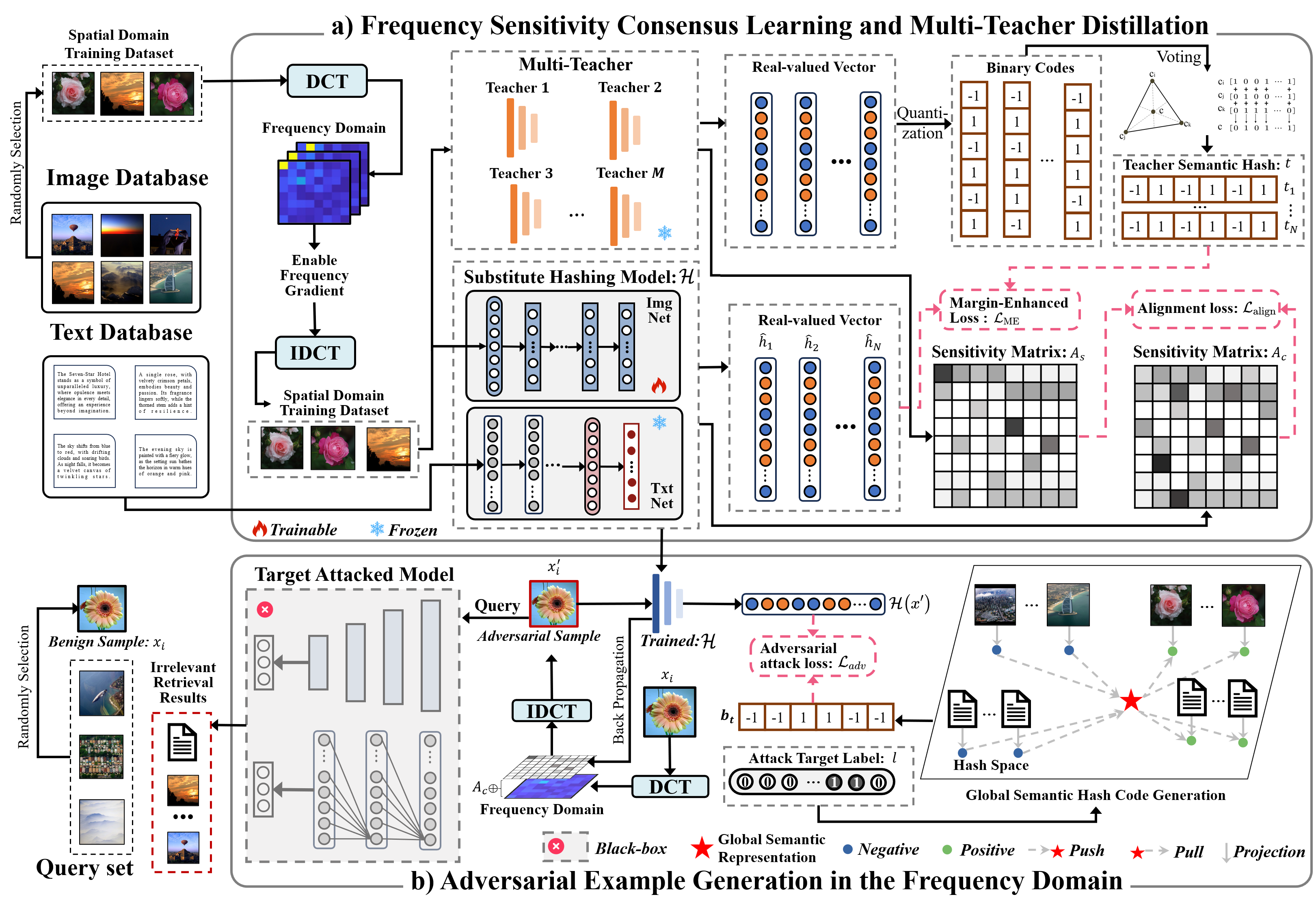

We propose FACH, a frequency-domain adversarial attack method for deep cross-modal hashing retrieval systems. FACH combines low-frequency masking with multi-teacher gradient fusion to reveal the vulnerabilities of deep hashing models in the frequency domain. Experimental results show that FACH significantly outperforms existing transfer-based attack methods, improving both the transferability and the effectiveness of adversarial attacks.

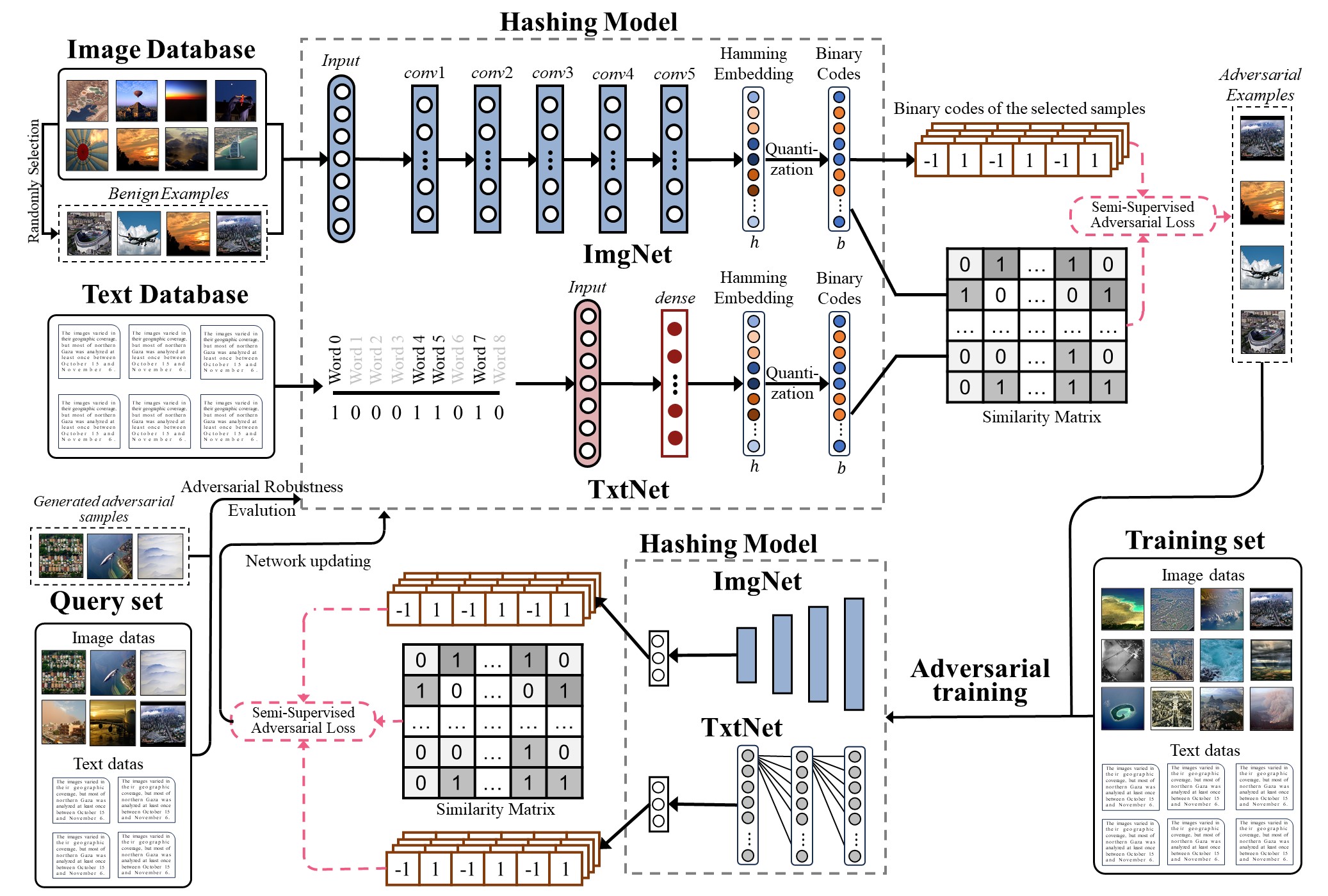

We propose DSARH, an end-to-end adversarial training framework that enhances the robustness of deep hashing models for retrieval tasks. By integrating adversarial perturbations with hash code learning and similarity matrices, the framework outperforms existing methods on both cross-modal and image retrieval tasks while remaining robust against a range of attacks.
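
As a rough illustration of the inner step of adversarial training for hashing, the sketch below runs projected gradient descent (PGD) against a toy linear hash layer `tanh(Wx)`, pushing the relaxed code of a perturbed input away from the clean input's binary code. The toy model, the inner-product similarity, and all names and step sizes are assumptions for illustration only. The framework's actual objective, which also involves similarity matrices, is not reproduced here, and the outer step that would update `W` on the adversarial inputs is omitted.

```python
import math


def _project(row, x):
    # Linear projection of x onto one hash bit's weight vector
    return sum(w * xi for w, xi in zip(row, x))


def hash_similarity(W, x, b):
    """Inner product between reference code b and the relaxed code tanh(Wx)."""
    return sum(bj * math.tanh(_project(row, x)) for row, bj in zip(W, b))


def similarity_grad(W, x, b):
    """Gradient of hash_similarity with respect to x."""
    grad = [0.0] * len(x)
    for row, bj in zip(W, b):
        t = math.tanh(_project(row, x))
        coeff = bj * (1.0 - t * t)  # derivative of tanh is 1 - tanh^2
        for i, w in enumerate(row):
            grad[i] += coeff * w
    return grad


def pgd_hash_attack(W, x, eps=0.3, alpha=0.05, steps=10):
    """Hypothetical inner maximization: perturb x within an L-infinity ball
    so that its code drifts away from the clean binary code."""
    # Reference code from the clean input: b = sign(Wx)
    b = [1.0 if _project(row, x) >= 0 else -1.0 for row in W]
    delta = [0.0] * len(x)
    for _ in range(steps):
        x_adv = [xi + di for xi, di in zip(x, delta)]
        g = similarity_grad(W, x_adv, b)
        # Signed descent step on similarity, then projection onto [-eps, eps]
        delta = [
            max(-eps, min(eps, d - alpha * ((gi > 0) - (gi < 0))))
            for d, gi in zip(delta, g)
        ]
    return [xi + di for xi, di in zip(x, delta)], b
```

In a full training loop, the returned adversarial inputs would be mixed into the batches used to update the hash model.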

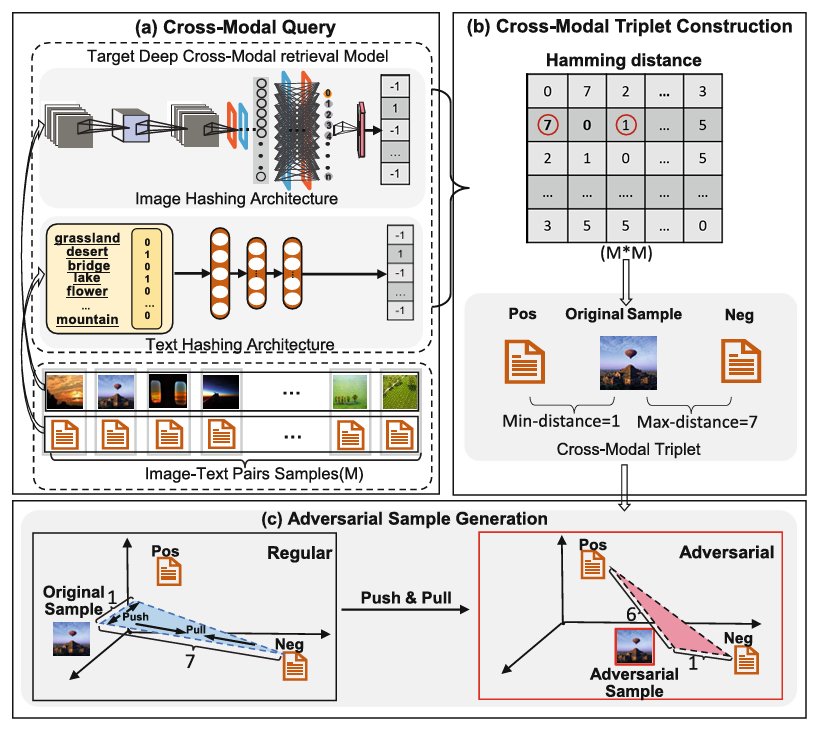

We propose BACH, an adversarial attack method for deep cross-modal hashing retrieval (DCMHR) models in black-box settings. By incorporating Random Gradient-Free (RGF) estimation into deep hashing attacks, BACH generates effective adversarial examples without prior knowledge of the target model. Experiments show that BACH achieves attack success rates comparable to those of white-box attacks.
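
Random gradient-free estimation approximates a gradient from loss values alone. With q random unit directions u_i in d dimensions and a small step σ, the estimate is g ≈ (d/q) Σ_i [f(x + σ·u_i) - f(x)] / σ · u_i. The sketch below implements this generic estimator in plain Python and uses it for one sign-gradient step. Here `loss_fn` stands in for a hypothetical black-box attack loss computed from the target model's outputs, and the parameter names and defaults are assumptions rather than BACH's settings.

```python
import math
import random


def rgf_gradient(loss_fn, x, sigma=1e-3, num_queries=100, rng=None):
    """Estimate the gradient of a black-box scalar loss at x using only queries."""
    rng = rng if rng is not None else random.Random(0)
    dim = len(x)
    f_x = loss_fn(x)  # one query at the current point
    grad = [0.0] * dim
    for _ in range(num_queries):
        # Random direction drawn uniformly from the unit sphere
        u = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(v * v for v in u))
        u = [v / norm for v in u]
        # Forward finite difference along u needs only loss values
        f_u = loss_fn([xi + sigma * ui for xi, ui in zip(x, u)])
        coeff = (f_u - f_x) / sigma
        for i in range(dim):
            grad[i] += coeff * u[i]
    # The dim / q factor makes the estimate unbiased for linear losses
    # and approximately unbiased for smooth losses when sigma is small
    return [dim * g / num_queries for g in grad]


def black_box_step(loss_fn, x, alpha=0.01, **kwargs):
    """One sign-gradient ascent step on the black-box loss (hypothetical helper)."""
    g = rgf_gradient(loss_fn, x, **kwargs)
    return [xi + alpha * ((gi > 0) - (gi < 0)) for xi, gi in zip(x, g)]
```

Each estimate costs `num_queries + 1` model queries, so the number of directions trades query budget against estimation noise.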

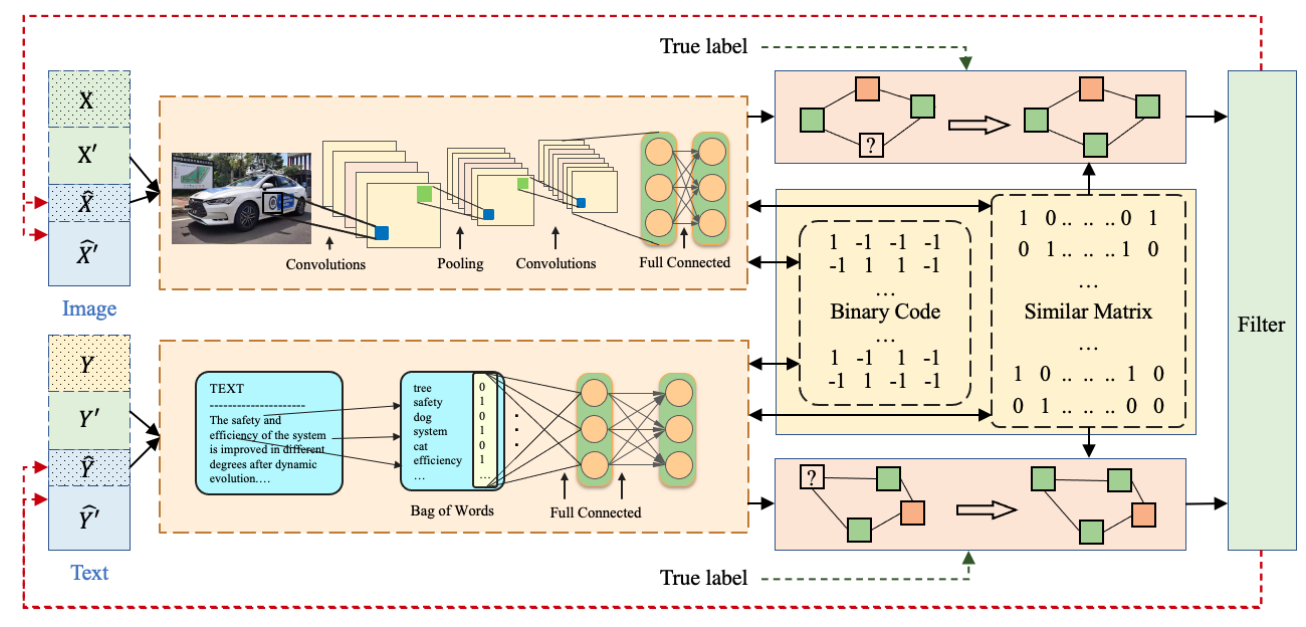

We propose STCH, a self-training-based cross-modal hashing framework designed for semi-supervised and semi-paired settings. The framework uses graph neural networks to capture inter-modality similarities and generate pseudo-labels, which are then refined by a heuristic filter to improve label consistency. Trained with an alternating self-training strategy, STCH outperforms existing methods on cross-modal hashing retrieval tasks.
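
The summary does not specify STCH's heuristic filter. As a hypothetical example of the kind of filter a self-training loop can use, the sketch below keeps a multi-label pseudo-label only when the image-side and text-side predictions agree after thresholding and every fused class score lies confidently far from the threshold. The function name, the agreement rule, and both thresholds are assumptions for illustration.

```python
def filter_pseudo_labels(img_probs, txt_probs, threshold=0.5, min_margin=0.3):
    """Hypothetical pseudo-label filter for a multi-label self-training loop.

    img_probs / txt_probs: per-sample lists of class probabilities predicted
    from the image side and the text side. Returns {sample_index: multi-hot label}.
    """
    kept = {}
    for idx, (p_img, p_txt) in enumerate(zip(img_probs, txt_probs)):
        label_img = [int(p >= threshold) for p in p_img]
        label_txt = [int(p >= threshold) for p in p_txt]
        # Assumed rule 1: both modalities must yield the same multi-hot label
        if label_img != label_txt:
            continue
        # Assumed rule 2: every averaged class score must be far from the threshold
        fused = [(a + b) / 2 for a, b in zip(p_img, p_txt)]
        if min(abs(p - threshold) for p in fused) < min_margin:
            continue
        kept[idx] = label_img
    return kept
```

Samples that fail either rule would simply stay unlabeled until a later self-training round.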